- Offer

- Services

- Assignment Help

- Homework Help

- Coursework Help

- Dissertation Help

- Programming Assignment Help

- Programming Language Assignment Help

- Adobe InDesign Assignment Help

- Advanced Network Design Assignment Help

- Artificial Intelligence Assignment Help

- Assembly Language Assignment Help

- Computer Programming Assignment Help

- C Assignment Help

- Coding Assignment Help

- Data Structure Assignment Help

- IT Assignment Help

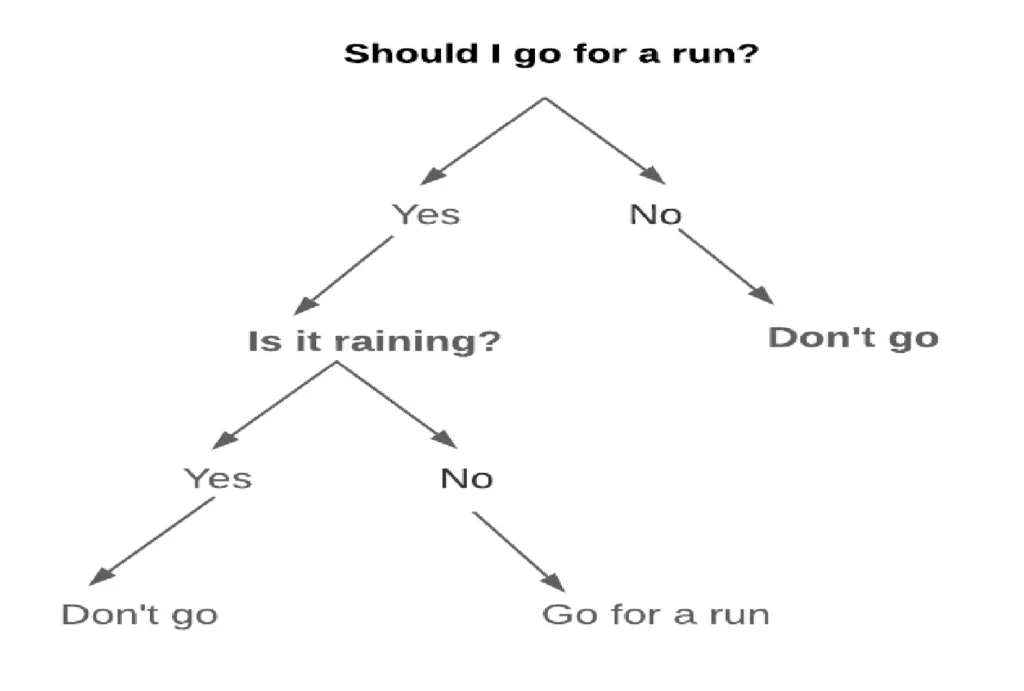

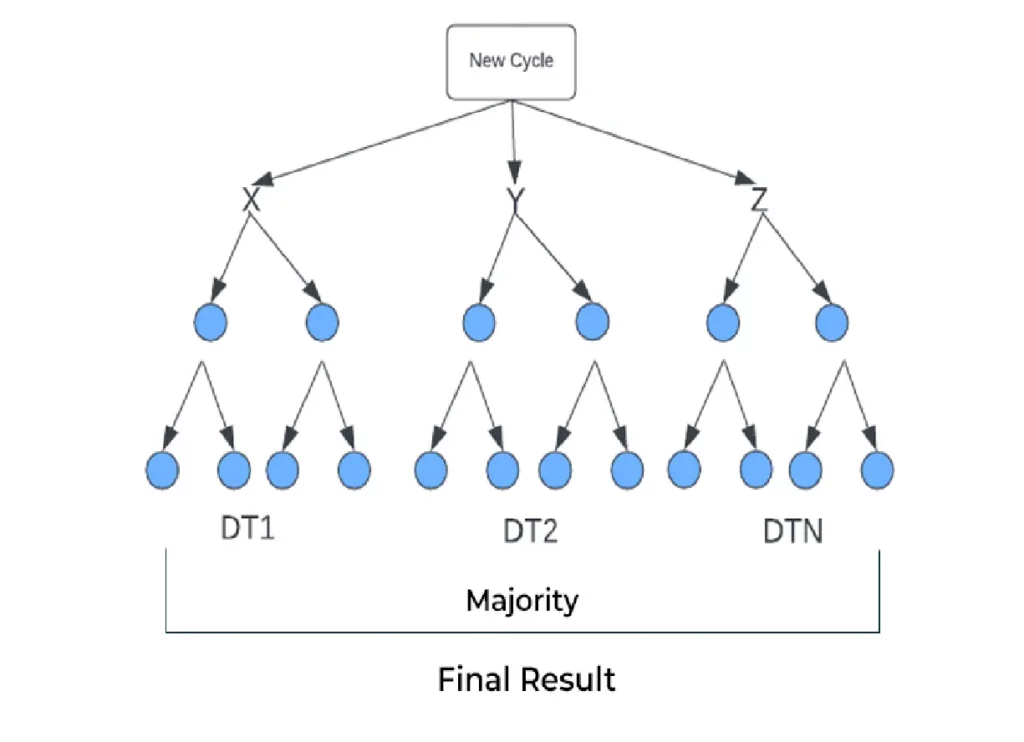

- Machine Learning Assignment Help

- ODOO Assignment Help

- R Programming Assignment Help

- UML Assignment Help

- Web Designing Assignment Help

- Big Data Assignment Help

- Data Mining Assignment Help

- Matlab Assignment Help

- Finance Assignment Help

- Research Paper Writing

- Engineering Assignment Help

- Essay Writing Services

- Marketing Plan

- Capstone Project Writing

Services in Australia

- Experts

- Blogs

- Reviews

4.9/5

+61 480 020 208

Order Now